Data security is one of the key areas enterprises will focus on in 2023. With the rapid increase in the number and severity of cyberattacks putting a large amount of key infrastructure at risk, enterprises can no longer afford to treat security as something they can plan for later.

In this blog series, focused on data center security, we have so far covered topics of immediate concern, such as:

- The Current State of Private Cloud Security

- Implementing Zero-Trust Security in Private Cloud Environments

- Data Center Security Architecture and Workload Protection with VMware Cloud Foundation

In this entry, however, we will focus on the future and how infrastructure is evolving to provide better, more secure solutions for enterprise environments.

The Future is Hardware Accelerators

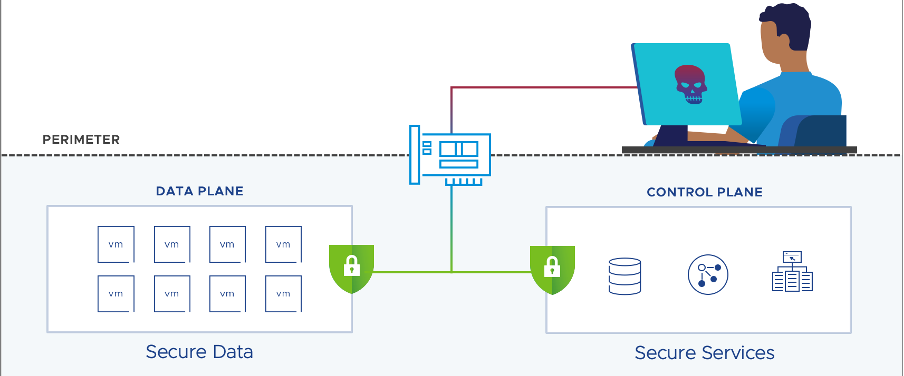

Customers (and cyber attackers alike) are aware of the security risks that currently challenge data centers and how they will continue to proliferate, especially with applications distributed across many locations. In the last 5 years, the traditional perimeter security model has been completely shattered. Security now needs to be distributed broadly yet enforced locally. This means leveraging proper hardware enforcement of those security policies and boundaries.

The goal for many organizations is to implement a zero-trust security model. CPU cycles are a precious resource that should be reserved for workload demand, which leaves room for offload accelerators to take on the processing that a distributed security model requires.

The main challenge of traditional hardware accelerators is that they can drive up both CapEx and OpEx, depending on the deployment model. In a financial model where hardware accelerators cannot be deployed in every host, there is an operational challenge of “which workload” and “which host”, and ops teams must constantly be mindful of it when deploying new applications. Also, the more inconsistent the data center architecture, the more exposed it is to security risks.
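
The “which workload, which host” question can be made concrete with a small placement check. This is only an illustrative sketch: the `Host` and `Workload` types, the host names, and the core counts are all hypothetical and do not correspond to any scheduler or VMware API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Host:
    name: str
    has_accelerator: bool
    free_cpu_cores: int


@dataclass(frozen=True)
class Workload:
    name: str
    needs_accelerator: bool
    cpu_cores: int


def eligible_hosts(workload: Workload, hosts: List[Host]) -> List[str]:
    """Return the names of hosts that can run the workload."""
    return [
        h.name
        for h in hosts
        if h.free_cpu_cores >= workload.cpu_cores
        and (h.has_accelerator or not workload.needs_accelerator)
    ]


# Hypothetical mixed cluster: only one of three hosts has an accelerator.
mixed = [
    Host("host-a", has_accelerator=True, free_cpu_cores=8),
    Host("host-b", has_accelerator=False, free_cpu_cores=32),
    Host("host-c", has_accelerator=False, free_cpu_cores=32),
]
# The same cluster with an accelerator in every host.
uniform = [Host(h.name, True, h.free_cpu_cores) for h in mixed]

secure_app = Workload("secure-app", needs_accelerator=True, cpu_cores=16)

print(eligible_hosts(secure_app, mixed))    # the only accelerated host is too full
print(eligible_hosts(secure_app, uniform))  # any host with enough free cores qualifies
```

In the mixed cluster the accelerator becomes an extra placement constraint that ops teams have to track. With uniform hardware, placement goes back to being a capacity question only.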

From a security standpoint, what customers need is a consistent separation between two environments: a production, workload-focused security zone (that can be further segmented all the way down to the individual workload) and a completely separate network environment for how the infrastructure is managed and how network services are offered. The perimeter now becomes the network card itself, and the separation, a physical one.
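
As a rough software analogy for that separation, the sketch below classifies addresses into a management zone and a workload zone and rejects any flow that crosses between them. The address ranges and the function names are placeholder assumptions, and the sketch stops at the two zones rather than segmenting down to individual workloads. On a DPU the separation is enforced in hardware, not by host-side code like this.

```python
import ipaddress
from typing import Optional

# Hypothetical address plan; these ranges are placeholders for illustration.
ZONES = {
    "management": ipaddress.ip_network("10.0.0.0/24"),
    "workload": ipaddress.ip_network("10.1.0.0/16"),
}


def zone_of(address: str) -> Optional[str]:
    """Return the name of the zone containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in ZONES.items():
        if ip in network:
            return name
    return None


def flow_allowed(src: str, dst: str) -> bool:
    """Allow a flow only when both ends sit in the same known zone."""
    src_zone = zone_of(src)
    return src_zone is not None and src_zone == zone_of(dst)


print(flow_allowed("10.1.4.7", "10.1.9.20"))  # workload to workload
print(flow_allowed("10.1.4.7", "10.0.0.5"))   # workload to management
print(flow_allowed("192.0.2.1", "10.1.4.7"))  # unknown source
```

Only the first flow is allowed. Anything that crosses zones or originates outside the known ranges is denied by default, which mirrors the zero-trust stance described earlier.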

How DPUs and SmartNICs Change the Security Landscape

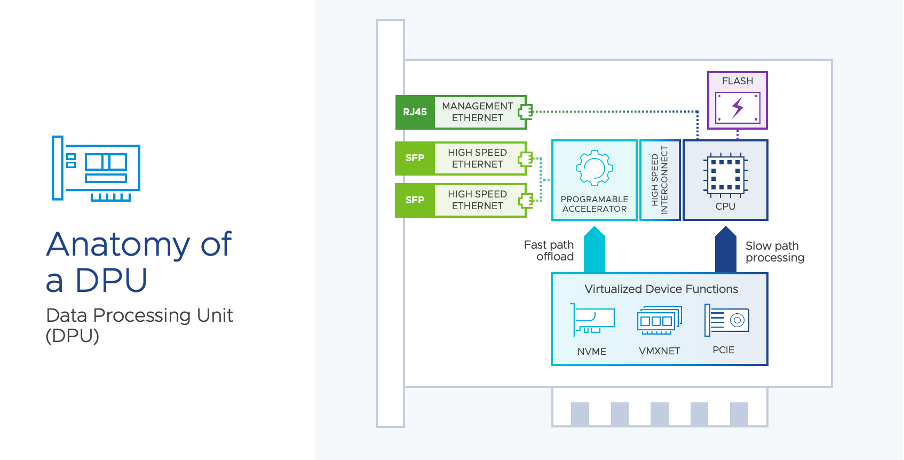

A SmartNIC (Network Interface Card) is a specialized type of NIC that offloads certain network processing tasks from the host CPU. This can include tasks such as packet filtering, encryption/decryption, and compression. SmartNICs are typically used in data centers and other high-performance computing environments to improve network performance and reduce the load on the host CPU.

With a DPU (Data Processing Unit), however, such as NVIDIA’s BlueField-2 DPU, the focus is more on a specialized type of processing unit designed to accelerate specific types of data processing tasks.

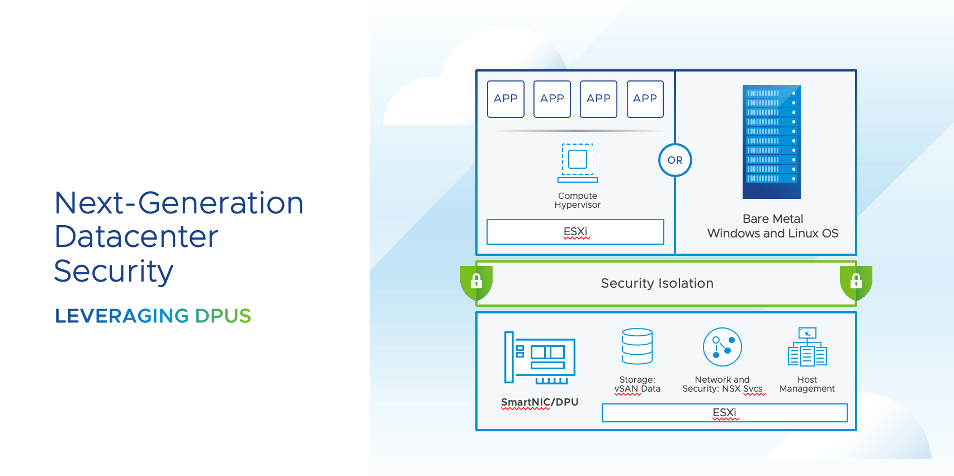

Recently, as part of the vSphere 8.0 launch at VMware Explore 2022, VMware announced the Distributed Services Engine (DSE), previously known as Project Monterey. This enhancement makes it possible to offload tasks from the hypervisor to an associated DPU. It is essentially the link between deploying the hardware technology and having the hypervisor leverage it as part of the virtualized environment.

So what are some of the principal ways in which DPUs can help redefine the security architecture?

- Deep Packet Inspection (DPI): DPUs can be used to perform DPI on incoming network traffic, which allows for detailed analysis of the data payload in each packet. This can be used to detect and block malicious traffic, such as malware or hacking attempts.

- Firewall: DPUs can be used to implement firewall functionality, which can be used to block unauthorized access to the data center network.

- VPN: DPUs can be used to provide virtual private network (VPN) functionality, which can be used to secure remote access to the data center.

- Security Analytics: DPUs can be used to analyze network traffic and logs to detect security threats, such as unusual patterns of traffic or failed login attempts.

- Encryption: DPUs can be used to accelerate the encryption and decryption of network traffic, allowing the data center to maintain a high level of security while also maintaining high network performance.
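
To give a feel for the kind of logic behind the security analytics item, here is a minimal sketch that flags sources with repeated failed logins inside a sliding time window. The event format, the threshold, and the `flag_sources` function are assumptions made for illustration. This is plain Python, not DPU firmware or any vendor API.

```python
from collections import defaultdict, deque


def flag_sources(events, threshold=3, window_seconds=60):
    """Flag sources with at least `threshold` failed logins in a sliding window.

    `events` is an iterable of (timestamp_seconds, source_ip, succeeded)
    tuples, assumed to be ordered by timestamp.
    """
    recent = defaultdict(deque)  # source -> timestamps of recent failures
    flagged = set()
    for ts, source, succeeded in events:
        if succeeded:
            continue
        window = recent[source]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(source)
    return flagged


# Hypothetical log excerpt using documentation-only IP addresses.
events = [
    (0, "198.51.100.7", False),
    (10, "203.0.113.9", False),
    (20, "198.51.100.7", False),
    (30, "198.51.100.7", False),
    (40, "203.0.113.9", True),
    (200, "203.0.113.9", False),
]

print(flag_sources(events))
```

Here only `198.51.100.7` is flagged: it fails three times within 60 seconds, while the other source's two failures are too far apart. Running this kind of per-source bookkeeping on the DPU rather than on the host is what keeps those CPU cycles available for workloads.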

The technology to perform all these tasks has existed since the days of separate appliances. Before network function virtualization, customers often relied on separate appliances such as VPN concentrators, dedicated IPS/IDS machines, and the traditional perimeter firewall.

The responsibility for providing CPU cycles for these functions was initially settled when the appliances were sized, and an appliance could only be swapped out for a new one when throughput or processing limits were being reached. Network function virtualization moved that compute responsibility to the data center team, so CPU cycles that would otherwise be dedicated to the workload were now shared with the infrastructure appliances.

By offloading these security functions from the host CPU, DPUs can help improve the performance and scalability of data center security systems without suffering from the same limitations as deploying full-size appliances. Customers still retain the flexibility of virtualized network and security functions, but without having workloads fight for computing power.

Conclusions and Future Steps

DPUs are becoming more common in modern data centers as they help to improve the performance and security of data center networks while also reducing the load on the host CPU. They can be a valuable addition to any data center infrastructure looking to optimize performance and security.

The future that customers are looking at is not only one where they can bring hardware acceleration to all the hosts in the cluster, thus allowing all apps to leverage it. It is also about isolation.

An additional layer of protection is added to the network where the *way* infrastructure is being run is completely decoupled from *what* data flows through said infrastructure. This means robbing cyber attackers of yet another powerful tool.

Embedding security at the device also aligns well with the concept of DevSecOps, where developers think about security at the beginning of the software development process rather than working on it later as an afterthought.

This also reduces the likelihood that security features will degrade application performance. The approach of DPU companies like NVIDIA, which move security responsibilities over to the DPU so that GPUs and CPUs are less taxed, dovetails well with that philosophy.
