The recent posts on vSAN capacity topics (“Demystifying Capacity Reporting in vSAN” and “Understanding Reserved Capacity Concepts in vSAN”) have helped answer a lot of the key questions many of our customers had about how vSAN reports data usage. But there is another aspect to storage consumption and reporting that often gets overlooked: Space reclamation.

Let’s explore what occurs on an underlying shared storage system when there is guest VM storage activity, and why you may want to enable and run reclamation processes regularly in your environment. This post supplements the TRIM/UNMAP Space Reclamation section of the recently updated vSAN Space Efficiency Technologies document.

For the sake of clarity, all capacity figures are given in gigabytes (GB) rather than gibibytes (GiB), and may be rounded up or down to simplify the discussion.

Capacity Usage with Guest VMs and Enterprise Storage Systems

When writing new data, modern guest VM operating systems often consume new, unused space inside a volume (VMDK) rather than space previously occupied by deleted data. The OS tracks the blocks freed by deleted data and accurately reports the actual used capacity inside the guest. TRIM/UNMAP commands tell the underlying storage that those freed blocks no longer hold valid data, so they can be reclaimed, avoiding the read, modify/erase, write cycles that would otherwise occur on those blocks at a later time.

In a virtualized environment with thin-provisioned shared storage, TRIM/UNMAP must be supported and enabled for the guest VM to pass this reclamation information on to the storage system. Otherwise, the storage system simply sees that new blocks are in use, and a thin-provisioned VMDK grows without ever giving back the space the guest has freed. This type of growth is most often associated with workloads where a lot of new data is written to a VM, deleted, and then followed by more new data.
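Inside a Linux guest, one way to confirm that a virtual disk advertises discard (TRIM/UNMAP) support is to read the kernel's `queue/discard_max_bytes` attribute in sysfs, where a value of 0 means the device does not accept discard requests; `lsblk --discard` reports the same information in its DISC-GRAN and DISC-MAX columns. The function below is a minimal sketch: its name and the optional sysfs-root argument (there only so it can be exercised against a scratch directory) are hypothetical. A device that advertises discard only shows that the guest can issue the commands; whether the storage system reclaims the space still depends on TRIM/UNMAP being enabled there.

```shell
#!/usr/bin/env bash
# Hypothetical helper. Exit 0 if the block device advertises discard (TRIM/UNMAP)
# support, exit 1 if it does not, and return 2 if the attribute cannot be read.
# Reads the Linux sysfs attribute <root>/<dev>/queue/discard_max_bytes, where 0
# means the device does not accept discard requests. The optional second
# argument overrides the default /sys/block root (useful for testing).
discard_supported() {
  local dev=$1 root=${2:-/sys/block} max
  max=$(cat "$root/$dev/queue/discard_max_bytes" 2>/dev/null) || return 2
  [ "${max:-0}" -gt 0 ]
}

# Example usage inside a Linux guest:
#   if discard_supported sda; then echo "sda accepts discard"; fi
#   lsblk --discard   # nonzero DISC-GRAN / DISC-MAX values indicate support
```

Returning a distinct status for an unreadable attribute keeps "no discard support" separate from "wrong device name".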

But there is another, often overlooked scenario that can erode storage capacity: recurring updates of existing data inside the guest VM file system. These can result from the guest VM paging memory to disk, using temporary files, or writing to log files that are circularly updated within one or more files of a fixed size. Whether the VM is busy or relatively dormant, most VMs demonstrate this behavior to some degree. Because capacity usage inside the guest VM may stay largely the same, it is difficult to tell how much data is actually being rewritten.

If TRIM/UNMAP is not enabled, repeatedly overwriting data inside a guest VM can slowly erode storage system capacity, and the storage system will report higher capacity usage than the VMs actually use.
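The effect can be illustrated with a toy model. The sketch below is hypothetical and deliberately simplified: it counts blocks rather than GB, ignores file system metadata, and assumes a guest allocator that always writes to the next never-used block (modeled as a cursor that wraps around). A fixed-size file is deleted and rewritten several times, and the function prints how many blocks the thin-provisioned backend still holds, with and without UNMAP. In both cases the guest itself reports only the size of one copy as used.

```shell
#!/usr/bin/env bash
# Hypothetical toy model: a guest file system of NBLOCKS blocks repeatedly
# deletes and rewrites a file of FILE_BLOCKS blocks. The allocator is assumed
# to prefer never-used space, modeled as a cursor that wraps around.
# Prints how many blocks the thin-provisioned backend ends up holding.
simulate_rewrites() {
  local nblocks=$1 file_blocks=$2 rounds=$3 unmap=$4
  local -a backend=() live=()
  local next=0 r i b
  for ((r = 0; r < rounds; r++)); do
    # The guest deletes the previous copy; only UNMAP tells the backend.
    if [[ $unmap == yes && ${#live[@]} -gt 0 ]]; then
      for b in "${live[@]}"; do
        unset "backend[$b]"
      done
    fi
    live=()
    # The guest writes the new copy into the blocks after the cursor.
    for ((i = 0; i < file_blocks; i++)); do
      live+=("$next")
      backend[$next]=1
      next=$(( (next + 1) % nblocks ))
    done
  done
  echo "${#backend[@]}"
}

# The guest sees 10 used blocks in both runs.
simulate_rewrites 100 10 5 no   # prints 50: five generations of the file
simulate_rewrites 100 10 5 yes  # prints 10: only the live copy
```

Without UNMAP the backend count keeps climbing until the whole disk has been touched once, which mirrors how a thin-provisioned VMDK can grow toward its provisioned size while in-guest usage stays flat.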

Examples

The examples below show five Linux VMs in a vSAN cluster. These are the same VMs used in the “Demystifying Capacity Reporting” post: each was provisioned with a 100GB VMDK and had about 13GB of data in use. That post demonstrated how both provisioned space and used space change based on the applied storage policy, since vSAN allows prescriptive levels of resilience on a per-VM or even per-VMDK basis.

Over the last six weeks, those VMs were largely dormant: powered on, but not doing any meaningful work. For this exercise only, the storage policy was temporarily changed to FTT=0, which provides no data resilience. We discourage the use of FTT=0 for production workloads, but for this exercise it makes the numbers easier to explain.

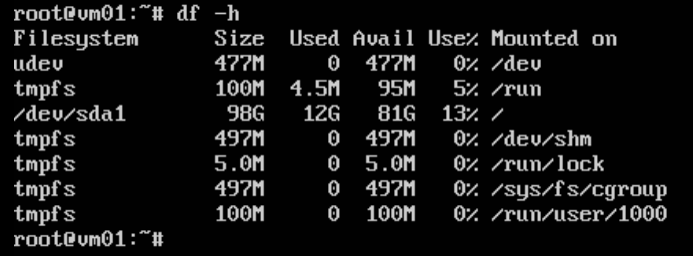

We can see in Figure 1 that VM01 reports about 12GB of data used. Note that the VM capacity reported in vCenter Server includes more than the VMDK and is therefore higher: among other things, its VM capacity calculation factors in the power state, VM memory, used VM namespace, and VMX world swap reservations.

Figure 1. VM capacity used as reported inside the guest VM.

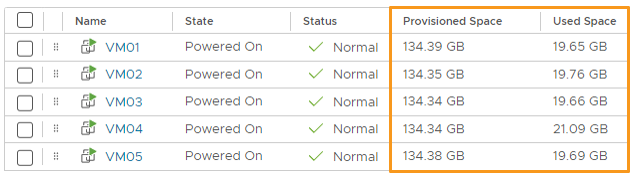

Yet VM01 and the four other VMs like it now show a “Used Space” of between 23 and 24GB, as shown in Figure 2. This is substantially higher than what the guest VMs report, and higher than what vCenter Server reported several weeks earlier.

Figure 2. “Used Space” noticeably more than the capacity usage reported inside the guest.
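Because the policy is FTT=0 (a single copy, so a 1x multiplier), the two sets of figures can be compared directly. Using the approximate values above, and assuming each of the five VMs reports roughly the same 12GB inside the guest as VM01, the gap works out to:

$$
(23 \text{ to } 24)\,\text{GB} - 12\,\text{GB} \approx 11 \text{ to } 12\,\text{GB per VM}, \qquad 5 \times (11 \text{ to } 12)\,\text{GB} \approx 55 \text{ to } 60\,\text{GB in total}
$$

Since “Used Space” also covers the other VM objects noted for Figure 1, this is an upper bound on the capacity held by blocks the guests have already freed, but it shows the order of magnitude involved: close to half of the reported usage.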

The increase in “Used Space” reported by vCenter Server results from the guest VMs writing this rewritten data to newly allocated blocks in the VMDK, while vSAN could not reclaim the blocks the guests had freed because TRIM/UNMAP was not enabled. This increased usage also shows up in the Cluster Capacity view, in both the “Overview” and “Usage breakdown” sections.

This is where space reclamation helps: blocks that are no longer in use can be properly reclaimed as free capacity.

vSAN Space Reclamation

vSAN supports TRIM/UNMAP commands from guest VMs. It is not enabled by default in earlier versions of vSAN, but it can easily be enabled using PowerCLI or RVC. Later versions of vSAN can enable it in the UI, and it is on by default in the vSAN Express Storage Architecture (ESA). Once it is enabled, the reclamation commands issued by guest VMs are passed on to vSAN, as shown in Figure 3.

Figure 3. vSAN Storage reclamation using TRIM/UNMAP

More details on enabling TRIM/UNMAP on vSAN, its prerequisites, and ensuring it runs in the guest VMs can be found in the TRIM/UNMAP Space Reclamation section of the vSAN Space Efficiency Technologies document. With TRIM/UNMAP enabled, the following command was run in each of the five Linux VMs shown above.

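```shell
# Discard unused blocks on every mounted file system whose device supports it.
# "|| true" keeps a non-zero exit status from failing the calling script or job.
/sbin/fstrim --all || true
```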

The Linux distribution used in this example runs this command as part of a weekly cron job, but for this demonstration it was run manually. As Figure 4 demonstrates, “Used Space” dropped significantly for each VM on which the reclamation process was run, and is now more in line with what the guest VM reports.

Figure 4. Reducing the “Used Space” for each VM through the TRIM/UNMAP reclamation process.
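To see how much each file system released, `fstrim` can also be run with `--verbose` (`-v`), which prints one line per mount point. The helper below is a minimal sketch that totals those lines; the function name `total_trimmed_bytes` is hypothetical, and it assumes the `(<N> bytes) trimmed` wording that util-linux's `fstrim` prints, which may vary between versions. On many systemd-based distributions, the equivalent weekly job ships as the `fstrim.timer` unit rather than a cron entry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: total the bytes reported by `fstrim --all --verbose`.
# Assumes util-linux output lines such as:
#   /: 10 GiB (10737418240 bytes) trimmed on /dev/sda2
# Lines without a "(<N> bytes) trimmed" field are ignored.
total_trimmed_bytes() {
  sed -n 's/.*(\([0-9][0-9]*\) bytes) trimmed.*/\1/p' |
    awk '{ sum += $1 } END { printf "%.0f\n", sum }'
}

# Example usage inside a guest (requires root):
#   sudo /sbin/fstrim --all --verbose | total_trimmed_bytes
```

Printing with `%.0f` rather than `%d` avoids the 32-bit integer truncation some awk implementations apply to large byte counts.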

Recommendation: Be patient with vCenter Server in reporting updated numbers. Whether you are changing storage policies or performing TRIM/UNMAP operations, it may take several minutes for these new values to be reflected in vCenter Server.

Remember that “Provisioned Space” and “Used Space” reflect the resilience settings of the applied storage policy. For this post, the VMs were temporarily stored in a non-resilient state to better illustrate the accounting of capacity as seen by the guest versus vCenter Server. Figure 5 shows how “Provisioned Space” and “Used Space” look on those same VMs when using FTT=1 through RAID-5 erasure coding, which consumes 1.33x the capacity of non-resilient data.

Figure 5. Provisioned and Used Space reflecting the resilience settings of the storage policy.
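As a worked check of the 1.33x figure, assume the 3+1 RAID-5 layout (three data components plus one parity component) that this multiplier corresponds to. Applied to the 100GB VMDK and the roughly 12GB the guest reports, and ignoring the other VM objects mentioned earlier:

$$
\text{multiplier} = \frac{3 + 1}{3} = \frac{4}{3} \approx 1.33
$$

$$
\text{Provisioned Space} \approx 100\,\text{GB} \times \tfrac{4}{3} \approx 133\,\text{GB}, \qquad \text{Used Space} \approx 12\,\text{GB} \times \tfrac{4}{3} = 16\,\text{GB}
$$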

The post “Demystifying Capacity Reporting” provides more detail on how these reported capacity values change based on storage policy. The section “RAID-1, RAID-5, or RAID-6 – Which is Right for You?” in the vSAN Space Efficiency Technologies document provides a summary of resilience settings and the associated space consumed on a vSAN datastore.

Summary

Virtual machines have a subtle way of consuming more space than expected through recurring write activity. TRIM/UNMAP in a vSAN environment can be an important tool, not only to reclaim unused storage capacity, but also to reconcile the capacity reported inside the VM with the capacity reported by vCenter Server.

Discover more from VMware Cloud Foundation (VCF) Blog
