Among the long list of enhancements introduced with vSAN 7 U1 is a subtle but important improvement to one of vSAN’s optional storage policy rules that changes how data is placed. Since data placement concepts can become complex, this post uses simple examples to describe the change and does not cover all cases. Let’s look at what changed, why, and the best way to accommodate this change in your vSAN and VCF-powered environments.

What changed

The “number of disk stripes per object” storage policy rule attempts to improve performance by distributing the data contained in a single object (such as a VMDK) across more capacity devices. Commonly known as “stripe width,” this rule tells vSAN to split an object into chunks of data (known in vSAN as “components”) and spread them across more capacity devices. This can drive higher levels of I/O parallelism if, and only if, the capacity devices are a significant source of contention.

The degree of benefit from setting a stripe width will vary for the reasons discussed in “Using Number of Disk Stripes Per Object on vSAN-Powered Workloads” in the vSAN Operations Guide. Thanks to the flexibility of storage policy-based management (SPBM), an administrator can easily apply this setting to a specific set of VMs, test the result, and revert to the old policy setting, all without any downtime.

Recommendation: When adding, changing, or removing the stripe width setting in a storage policy, allow the resulting resynchronization to complete before you begin observing performance differences.

Stripe Width and Data Placement Schemes

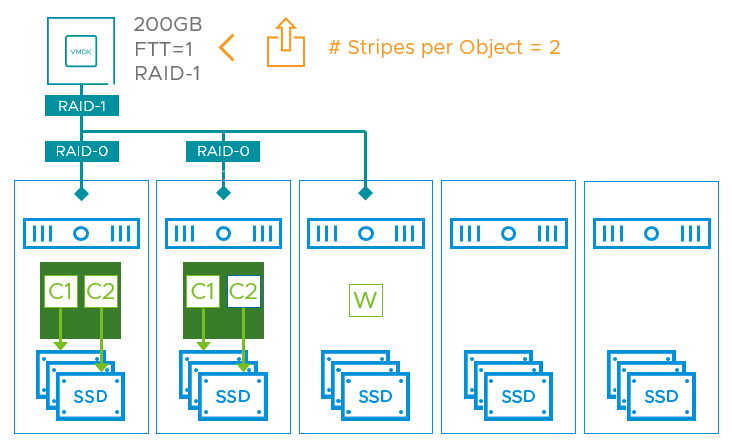

The stripe width storage policy rule allows a value between 1 and 12. An object using a simple RAID-1 mirror and a stripe width of 1 (the default if the rule is not defined at all) would result in one component on at least one capacity device. An object using RAID-1 with a stripe width of 2 would result in two components per replica, spread across additional capacity devices, as shown in Figure 1.

Figure 1. Object using RAID-1 with a stripe width of 2, where there are 2 components per object replica.

Note that the storage policy defines the minimum number of stripes. vSAN may choose to split an object’s components further for a variety of reasons.

Prior to vSAN 7 U1, a stripe width setting would be applied to an object in different ways, depending on the data placement scheme used. For example:

- A 200GB object using a storage policy with RAID-1 and a stripe width of 4 would result in each replica being composed of 4 components, placed on a host on its side of the RAID tree.

- A 200GB object using a storage policy with RAID-5 and a stripe width of 4 would result in an object distributed across 4 hosts totaling 16 components.

- A 200GB object using a storage policy with RAID-6 and a stripe width of 4 would result in an object distributed across 6 hosts, totaling 24 components.
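To make the pre-7 U1 arithmetic concrete, here is a minimal Python sketch that reproduces the three examples above. It is a simplified model derived only from those examples, not vSAN’s placement logic: the `legacy_components` function and the `SCHEME_WIDTH` table are hypothetical, RAID-1 counts are per replica, and witness components are ignored.

```python
"""Hypothetical model of pre-vSAN 7 U1 stripe width behavior.

Derived only from the examples in this post; witness components are ignored.
"""

# Base component count before striping: one per RAID-1 replica,
# 3+1 for RAID-5, and 4+2 for RAID-6 (one component per host).
SCHEME_WIDTH = {"RAID-1": 1, "RAID-5": 4, "RAID-6": 6}


def legacy_components(scheme: str, stripe_width: int) -> int:
    """Components for one RAID-1 replica or for a whole RAID-5/6 object."""
    if not 1 <= stripe_width <= 12:
        raise ValueError("stripe width must be between 1 and 12")
    # Before 7 U1, every erasure-coded component was striped again,
    # so the stripe width multiplied the scheme's base component count.
    return SCHEME_WIDTH[scheme] * stripe_width


if __name__ == "__main__":
    for scheme in ("RAID-1", "RAID-5", "RAID-6"):
        print(f"{scheme}, stripe width 4: {legacy_components(scheme, 4)} components")
```

Running it prints 4, 16, and 24 components, matching the list: the same stripe width value multiplies an erasure-coded object far more than a RAID-1 replica.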

With RAID-5/6 erasure codes, the 200GB object would already be dispersed (with parity) across more devices in different hosts, reducing the potential for contention in that part of the stack. The examples above show how a given stripe width value applied to objects using RAID-5/6 would split the data far more aggressively than the same stripe width value applied to a RAID-1 object. Since the data was already distributed across many hosts, the resulting large increase in component count was likely excessive, and beyond what may be necessary for any improvement.

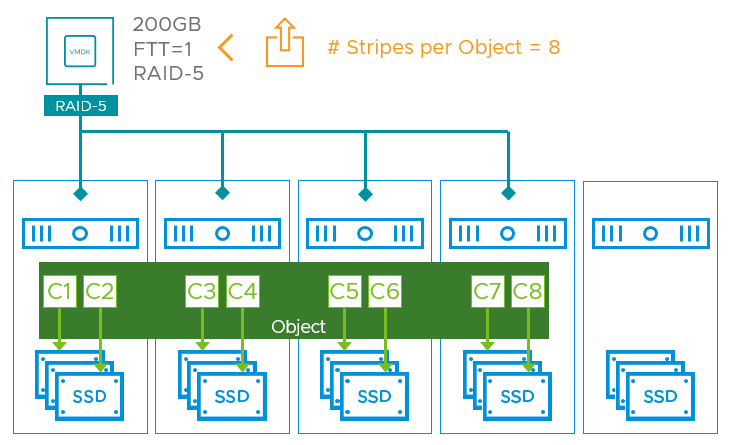

In vSAN 7 U1, the stripe width rule behaves the same for RAID-1 objects, but it has been improved to better accommodate objects using erasure codes: objects that use a policy with a RAID-5 or RAID-6 erasure code count the “stripe width” differently from objects using a RAID-1 mirror. The components of the erasure-coded stripe, including parity, count toward the applied stripe width. For example:

- A 200GB object using a storage policy with RAID-5 and a stripe width of 4 would result in an object distributed across 4 hosts totaling 4 components.*

- A 200GB object using a storage policy with RAID-5 and a stripe width of 8 would result in an object distributed across 4 hosts totaling 8 components.

- A 200GB object using a storage policy with RAID-6 and a stripe width of 6 would result in an object distributed across 6 hosts, totaling 6 components.*

- A 200GB object using a storage policy with RAID-6 and a stripe width of 12 would result in an object distributed across 6 hosts, totaling 12 components.

*The same number of components as if the stripe width rule were not used.
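The 7 U1 counting can be sketched the same way. In this hypothetical model, an erasure-coded object always keeps at least its base 4 (RAID-5) or 6 (RAID-6) components, and the stripe width adds components only in whole multiples of that base. How vSAN treats values that are not a multiple (for example, 5 with RAID-5) is not stated here; the sketch assumes they round down, which is our reading rather than documented behavior. The function names are illustrative only.

```python
"""Hypothetical model of vSAN 7 U1 stripe width counting (witnesses ignored)."""

# Base component count: 1 per RAID-1 replica, 3+1 for RAID-5, 4+2 for RAID-6.
SCHEME_WIDTH = {"RAID-1": 1, "RAID-5": 4, "RAID-6": 6}


def u1_components(scheme: str, stripe_width: int) -> int:
    """Components for one RAID-1 replica or for a whole RAID-5/6 object."""
    if not 1 <= stripe_width <= 12:
        raise ValueError("stripe width must be between 1 and 12")
    base = SCHEME_WIDTH[scheme]
    # Data and parity components now count toward the stripe width.
    # ASSUMPTION: values that are not a multiple of the base round down,
    # but never below the base. For RAID-1 (base 1) this returns stripe_width.
    return max(base, (stripe_width // base) * base)


def components_per_host(scheme: str, stripe_width: int) -> int:
    """Components each host holds for an erasure-coded object."""
    if scheme == "RAID-1":
        raise ValueError("per-host spread is only modeled for RAID-5/6")
    return u1_components(scheme, stripe_width) // SCHEME_WIDTH[scheme]


if __name__ == "__main__":
    for scheme, sw in (("RAID-5", 4), ("RAID-5", 8), ("RAID-6", 6), ("RAID-6", 12)):
        print(scheme, sw, u1_components(scheme, sw), components_per_host(scheme, sw))
```

The four cases yield 4, 8, 6, and 12 components, with 1, 2, 1, and 2 components per host; the RAID-5 case with a stripe width of 8 corresponds to the two components per host shown in Figure 2.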

Figure 2. Object using RAID-5, with a stripe width of 8, where there are 2 components per host.

With vSAN 7 U1, the stripe width for erasure-coded objects must be set in multiples of 4 (RAID-5) or 6 (RAID-6), respectively, for the effective stripe width to increase. This new method of calculation is much more practical, as a RAID-1 mirror is far more likely to need a higher stripe width value than a RAID-5/6 object. Objects using RAID-5/6 erasure codes would most likely not benefit from stripe widths beyond 2 or 3, if at all. The table below shows the stripe width settings for vSAN 7 U1 as they relate to the data placement scheme used.

All of the stripe width values shown in the table above are “per object,” just as the storage policy rule name “Number of disk stripes per object” implies. RAID-5/6 erasure codes provide resilience of the data within a single object, while RAID-1 mirroring creates “replica objects” to provide the desired level of failures to tolerate (e.g., FTT=1, FTT=2, FTT=3). This means that for RAID-1 mirrors, the total object count (and the underlying component count) is multiplied as the FTT level increases: FTT=1 has two active replica objects to provide resilience, FTT=2 has three, and FTT=3 has four. The stripe width settings listed above apply to each discrete object.
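Because the stripe width applies to each RAID-1 replica, the data component count for a mirrored object grows with both settings. A minimal sketch, assuming FTT+1 replicas and ignoring witness components and any additional splitting vSAN may perform (the function name is hypothetical):

```python
def raid1_data_components(ftt: int, stripe_width: int) -> int:
    """Data components for a RAID-1 object (witnesses not counted)."""
    if ftt not in (1, 2, 3):
        raise ValueError("this sketch covers FTT=1, FTT=2, and FTT=3")
    if not 1 <= stripe_width <= 12:
        raise ValueError("stripe width must be between 1 and 12")
    replicas = ftt + 1  # FTT=1 -> 2 replicas, FTT=2 -> 3, FTT=3 -> 4
    # The stripe width applies to each replica independently.
    return replicas * stripe_width


if __name__ == "__main__":
    for ftt in (1, 2, 3):
        print(f"FTT={ftt}, stripe width 4: {raid1_data_components(ftt, 4)} components")
```

With a stripe width of 4, this yields 8, 12, and 16 data components for FTT=1, 2, and 3, which is one reason to keep RAID-1 stripe widths modest.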

Recommendation: Be conservative with the number of disk stripes. Leave the default setting (unused) unless there is reasonable suspicion that increasing the stripe width will help. If you do increase the stripe width, increase it incrementally to determine whether there are any material benefits. Keeping striping to a minimum simplifies vSAN’s data placement decisions, especially on small or capacity-constrained clusters.

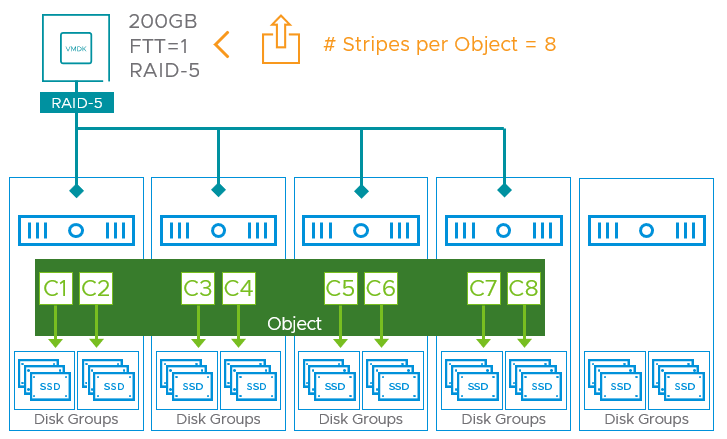

Striping and Disk Groups

Also new in vSAN 7 U1, striped objects will strive to use different disk groups within the same host. As an example, Figure 3 shows a RAID-5 object with a stripe width of 8 in a 5-host cluster with 2 disk groups per host. In this configuration, each host holds two components, and vSAN places those components on different disk groups within the host. This is a “best-effort” behavior: vSAN does so only if the conditions for placement can be met. Spreading components this way can improve I/O parallelism and let a given object use more buffering and caching. This bodes well for single VMDKs under high demand.

This change applies to objects using both RAID-5/6 and RAID-1. For RAID-5/6, it never compromises the anti-affinity rules that keep components on separate hosts to maintain resilience and availability. In other words, a RAID-5 object will always be distributed across at least 4 hosts, as Figure 3 demonstrates.
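The disk group behavior described above can be illustrated with a toy placement routine. This is a hypothetical round-robin sketch, not vSAN’s placement algorithm, which also weighs capacity, fault domains, and other conditions and applies this spread only on a best-effort basis. It spreads components across the hosts an object spans first, then across disk groups within each host.

```python
from collections import defaultdict


def place_components(num_components: int, hosts: int, disk_groups_per_host: int):
    """Toy round-robin placement returning {(host, disk_group): [component ids]}.

    Hypothetical illustration only: components go to hosts in turn, and each
    host's later components move on to its next disk group.
    """
    if min(num_components, hosts, disk_groups_per_host) < 1:
        raise ValueError("all arguments must be positive")
    placement = defaultdict(list)
    for component in range(num_components):
        host = component % hosts
        disk_group = (component // hosts) % disk_groups_per_host
        placement[(host, disk_group)].append(component)
    return dict(placement)


if __name__ == "__main__":
    # RAID-5 object with a stripe width of 8: 8 components across the 4 hosts
    # the object spans, each host having 2 disk groups.
    for (host, dg), comps in sorted(place_components(8, 4, 2).items()):
        print(f"host {host}, disk group {dg}: components {comps}")
```

For 8 components on 4 hosts with 2 disk groups each, every host ends up with one component in each disk group, mirroring the layout described for Figure 3.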

Figure 3. Object using RAID-5, with a stripe width of 8, with hosts configured with multiple disk groups.

Stripe Width for Objects Greater than 2TB

vSAN 7 U1 makes a small enhancement for objects larger than 2TB when a stripe width is applied to them. The first 2TB of address space is subject to the stripe width defined in the storage policy; the address space beyond that uses a stripe width no greater than 3. This helps vSAN better manage component counts and data placement decisions.
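The large-object behavior can be expressed as a function of the address offset within an object. This is a hedged sketch: the post does not say whether “2TB” is decimal or binary, so the sketch assumes 2 TiB, and it assumes the stripe width beyond that point is the policy value capped at 3. The names are hypothetical.

```python
TIB = 2**40
# ASSUMPTION: "2TB" interpreted as 2 TiB; the post does not specify units.
LARGE_OBJECT_THRESHOLD = 2 * TIB
MAX_STRIPE_WIDTH_BEYOND_THRESHOLD = 3


def effective_stripe_width(policy_stripe_width: int, offset_bytes: int) -> int:
    """Stripe width applied at a given byte offset within an object."""
    if not 1 <= policy_stripe_width <= 12:
        raise ValueError("stripe width must be between 1 and 12")
    if offset_bytes < 0:
        raise ValueError("offset must be non-negative")
    if offset_bytes < LARGE_OBJECT_THRESHOLD:
        # The first 2TB of address space follows the storage policy.
        return policy_stripe_width
    # ASSUMPTION: beyond 2TB, the policy value is capped at 3.
    return min(policy_stripe_width, MAX_STRIPE_WIDTH_BEYOND_THRESHOLD)


if __name__ == "__main__":
    for offset_tib in (0, 1, 3):
        print(offset_tib, effective_stripe_width(8, offset_tib * TIB))
```

With a policy stripe width of 8, offsets at 0 and 1 TiB use 8, while an offset at 3 TiB uses 3; a policy value of 3 or less is unaffected.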

Summary

The optimizations introduced to the stripe width storage policy rule in vSAN 7 U1 provide more appropriate levels of disk striping when using storage policies based on RAID-5/6 erasure codes. This and the other improvements described here are further examples of how the vSAN Engineering team is constantly striving to make vSAN perform better, be more efficient, and be easier to manage.

Discover more from VMware Cloud Foundation (VCF) Blog
