The post “Write Buffer Sizing in vSAN when Using the Very Latest Hardware” described many of the basics around vSAN’s implementation of a two-tier storage system, and how hardware can change the performance of the system. How do software-related settings such as deduplication and compression impact performance? Let’s step through what it is, how it is implemented on vSAN, and how to better understand if and when it may impact performance.

What is it?

Data deduplication is a technique that detects duplicate blocks and uses a hash table to reference a single stored copy of each block, instead of storing the same block multiple times. Data compression takes a given amount of data, such as the content within a block, and uses encoding techniques to store it more efficiently. These two techniques are unrelated to each other but attempt to achieve a similar goal: space efficiency.
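The hash-table idea can be sketched in a few lines of Python. This is a toy illustration of deduplication in general, not vSAN's implementation: the `DedupStore` class, the 4096-byte block size, and the choice of SHA-256 as the fingerprint are all assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4096  # bytes; assumed block size for this illustration


class DedupStore:
    """Toy block store that keeps one physical copy per unique block."""

    def __init__(self):
        self.blocks = {}       # fingerprint -> block bytes (stored once)
        self.logical_map = []  # logical block index -> fingerprint

    def write(self, data: bytes) -> None:
        # Split the incoming data into fixed-size blocks and fingerprint each.
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            fingerprint = hashlib.sha256(block).hexdigest()
            # Store the block only if this content has not been seen before.
            if fingerprint not in self.blocks:
                self.blocks[fingerprint] = block
            # The logical view always records a reference to the stored copy.
            self.logical_map.append(fingerprint)

    def read(self) -> bytes:
        # Rebuild the logical data by following the references.
        return b"".join(self.blocks[fp] for fp in self.logical_map)

    def logical_blocks(self) -> int:
        return len(self.logical_map)

    def physical_blocks(self) -> int:
        return len(self.blocks)
```

Writing three identical blocks followed by one different block results in four logical blocks but only two physical ones. The savings depend entirely on how much duplicate content the data happens to contain.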

The method in which space efficiency is implemented depends on the solution, and can affect the level of space savings and the effort it takes to achieve the result. No matter what the method of implementation, both deduplication and compression techniques are opportunistic space efficiency features. The level of capacity savings is not guaranteed. By contrast, data placement techniques using erasure codes like RAID-5 or RAID-6 are deterministic: They provide a guaranteed level of space efficiency for data stored in a resilient manner.

How is it implemented in vSAN?

Deduplication and compression (DD&C) in vSAN is enabled at the cluster level, as a single space efficiency feature. The process occurs as the data is destaged to the capacity tier, well after the write acknowledgments have been sent back to the VM. Deferring any form of data manipulation until after the acknowledgment has been sent helps keep the write latency seen by the guest VM low.

As data is destaged, the deduplication process looks for opportunities to deduplicate the 4KB blocks of data it finds within a disk group, which is vSAN’s deduplication domain. This task is followed by the compression process. If a 4KB block can be compressed by 50% or more, vSAN compresses it. Otherwise, it leaves the block as-is and continues destaging the data to the capacity tier.
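The compression rule described above can be sketched as follows. This is a minimal illustration under stated assumptions: `zlib` is used only as a stand-in for whichever compression algorithm vSAN uses, and the names `maybe_compress`, `BLOCK_SIZE`, and `THRESHOLD` are hypothetical.

```python
import zlib
from typing import Tuple

BLOCK_SIZE = 4096            # 4KB block, as described in the text
THRESHOLD = BLOCK_SIZE // 2  # keep the compressed form only if it saves 50% or more


def maybe_compress(block: bytes) -> Tuple[bool, bytes]:
    """Return (was_compressed, payload) for a single block.

    The compressed form is kept only when it fits in half the block size;
    otherwise the original block is returned untouched.
    """
    compressed = zlib.compress(block)
    if len(compressed) <= THRESHOLD:
        return True, compressed
    return False, block
```

A block of repeated bytes compresses far below the threshold and is stored compressed, while a block of random-looking data does not and is stored as-is. This is one reason the savings are opportunistic: the outcome depends on the content of each block.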

Figure 1. Data deduplication and compression during the destaging process

Implementing DD&C in this manner prevents the performance penalties found with inline systems that perform the deduplication prior to sending the write acknowledgment back to the guest. It also avoids the challenges of deduplicating data already at rest. While the DD&C process occurs after the write acknowledgment is sent to the guest VM, enabling it in vSAN can impact performance under certain circumstances, which will be discussed below.

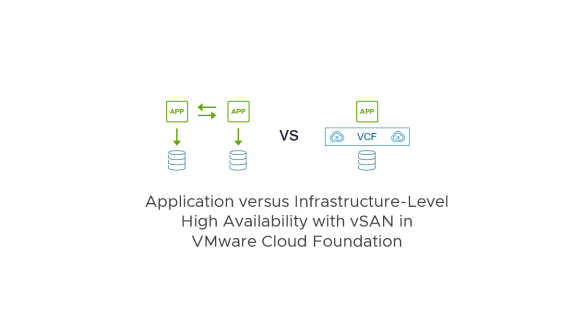

Two-Tier Storage System Basics

vSAN is a two-tier distributed storage system. Incoming data is written to a write buffer, with the write acknowledgment sent immediately back to the guest for optimal performance, and is then funneled down to the capacity tier at a time and frequency determined by vSAN. This architecture provides a higher level of storage performance while keeping the cost per gigabyte/terabyte of capacity reasonable.

Figure 2. Visualizing a two-tier system like a funnel

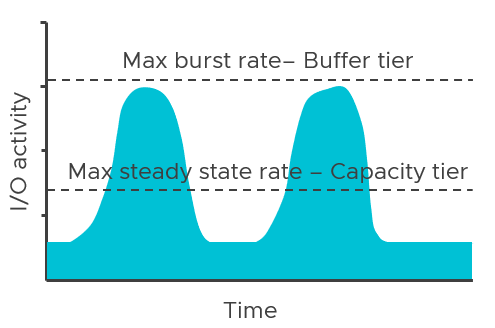

A two-tier system like vSAN has two theoretical performance maximums: a burst rate, representing the capabilities of the buffer tier, and a steady-state rate, representing the capabilities of the capacity tier. The underlying hardware at each tier has a tremendous influence on the performance capabilities of that tier, but software settings, applications, and workloads can impact performance as well.

Figure 3. Visualizing the theoretical maximums of a two-tier system using a time-based graph

The performance maximums of your vSAN hosts will be somewhere between the maximum burst rate and the maximum steady-state rate. Synthetic testing for long periods using HCIBench will stress the environment enough to show these approximate values when looking at the time-based graphs. Production workloads may hit these maximums in an undersized design.

Its Potential Impact on Performance

Deduplication and compression require effort: computational effort, the use of RAM, and the additional I/O that stems from it. This is true regardless of how it is implemented. It just depends on when, where, and how it occurs. In vSAN, since this effort occurs once the data in the write buffer begins to destage, the task reduces the effective destaging throughput to the capacity tier. This lowers the maximum steady-state rate that the cluster can provide. In other words, a cluster with DD&C enabled may have similar performance to a cluster with DD&C deactivated that uses much lower performing capacity tier devices.

Figure 4. Destaging and maximum steady-state rates are reduced when DD&C is enabled

Assuming all other variables remain the same, lowering the maximum steady-state rate of the capacity tier would lead to the following behaviors:

- The write buffer may fill up more quickly because the delta in performance between the two tiers has increased as a result of the slower capacity tier.

- The write buffer will destage more slowly because of the reduced destaging performance.

- The write acknowledgment time (write latency) of the guest VMs may be affected if destaging has begun. The degree of impact depends on several factors, including the destage rate the capacity tier is capable of. This scenario is most common when the aggregate working set of data far surpasses the capacity of the buffer tier, or when a fast duty cycle places more significant demands on the capacity tier.

- The write acknowledgment time (write latency) of the guest VM will be unaffected IF the buffer has not reached any destaging thresholds. This would be common with a small aggregate working set of data that fits well within the buffer tier and not a lot of pressure on the buffer to destage.
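The behaviors listed above can be illustrated with a toy model of the funnel. This is a deliberately simplified sketch, not a representation of vSAN's destaging logic: the function `simulate_buffer`, its parameters, and all of the numbers used with it are hypothetical, and the model ignores destaging thresholds, overwrites of hot data, and read traffic.

```python
def simulate_buffer(ingest_rate, destage_rate, buffer_capacity, seconds):
    """Track buffer fill (in MB) second by second.

    ingest_rate and destage_rate are in MB/s, buffer_capacity is in MB.
    Returns (history, full_at), where full_at is the first second at which
    the buffer is full, or None if it never fills in the simulated window.
    """
    fill = 0.0
    history = []
    full_at = None
    for second in range(1, seconds + 1):
        # Writes land in the buffer while destaging drains it.
        fill = fill + ingest_rate - destage_rate
        # The buffer cannot go below empty or above its capacity.
        fill = max(0.0, min(fill, buffer_capacity))
        history.append(fill)
        if full_at is None and fill >= buffer_capacity:
            full_at = second
    return history, full_at


# Hypothetical numbers: same workload and buffer, two destage rates.
_, full_without_ddc = simulate_buffer(1000, 800, 60000, 600)
_, full_with_ddc = simulate_buffer(1000, 500, 60000, 600)
```

With these made-up numbers, the buffer fills after 300 seconds at the faster destage rate and after 120 seconds at the slower one. Once the buffer is full, sustained writes can proceed no faster than the destage rate. A workload whose ingest rate stays below the destage rate never fills the buffer in this model, which corresponds to the last bullet above.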

vSAN’s elevator algorithms detect a variety of conditions that will help determine if, when, and how much destaging should occur. It gradually introduces the destaging of data, and does not go as fast as it can, but only as fast as it needs to. This helps keep hot data in the buffer for subsequent overwrites, which reduces unnecessary destaging activity and potential impacts on performance.

RAID-5/6 erasure coding is another space efficiency option, enabled on a per-VM or per-VMDK basis using storage policies. Using space efficiency techniques together may also have some notable impacts. See “Using Space-Efficient Storage Policies (Erasure Coding) with Clusters Running DD&C” (5-12) in the vSAN Operations guide for more information.

Customization Options

vSAN’s architecture gives customers numerous options to tailor their clusters to meet their requirements. Designing and sizing correctly means clearly understanding the requirements and priorities. For example, in a given cluster, is capacity the higher priority, or is it performance? Are those priorities reflected in the existing hardware and software settings? Wanting the highest level of performance while choosing the lowest grade componentry and also using space efficiency techniques is a conflict in objectives. Space efficiency can be a way to achieve capacity requirements but comes with a cost.

Once the priorities are established, adjustments can be made to accommodate the needs of the environment. This could include:

- Faster devices in the capacity tier. If you enabled DD&C and notice higher than expected VM latency, consider faster devices at the capacity tier. This can help counteract the reduced performance of the capacity tier when DD&C is enabled. Insufficiently performing devices at the capacity tier are one of the most common reasons for performance issues.

- Use more disk groups. This will add more buffer capacity, increasing the room for hot working set data and reducing the urgency at which data is destaged. Two disk groups would be the minimum, with three disk groups the preferred configuration.

- Look at newer high-density storage devices for the capacity tier to meet your capacity requirements. Assuming they meet your performance requirements, these new densities may offset your need to use DD&C. See vSAN Design Considerations – Using Large Capacity Storage Devices for more information.

- Run the very latest version of vSAN. Recent editions of vSAN have focused on improving performance for clusters running DD&C: Improving the consistency of latency to the VM, and increasing the destage rate through software optimizations.

- Enable only in select clusters. Only enable DD&C in clusters with hardware that can provide sufficient performance to support the needs of the workloads. Optionally, space-efficient storage policies like RAID-5/6 could be applied to discrete workloads where it makes the most sense. Note there are performance tradeoffs with erasure coding as well.

Summary

Deduplication and compression is an easy and effective space efficiency feature. The design of DD&C in vSAN provides a data path that strives to keep guest VM latency low. While vSAN’s two-tier architecture helps minimize impacts on performance, an insufficient hardware configuration or a collection of high-demand workloads may overwhelm the configuration to the point where VMs see increased latency. Thanks to the flexibility of vSAN, you can tailor your settings and hardware configurations to accommodate the demands of your workloads.
