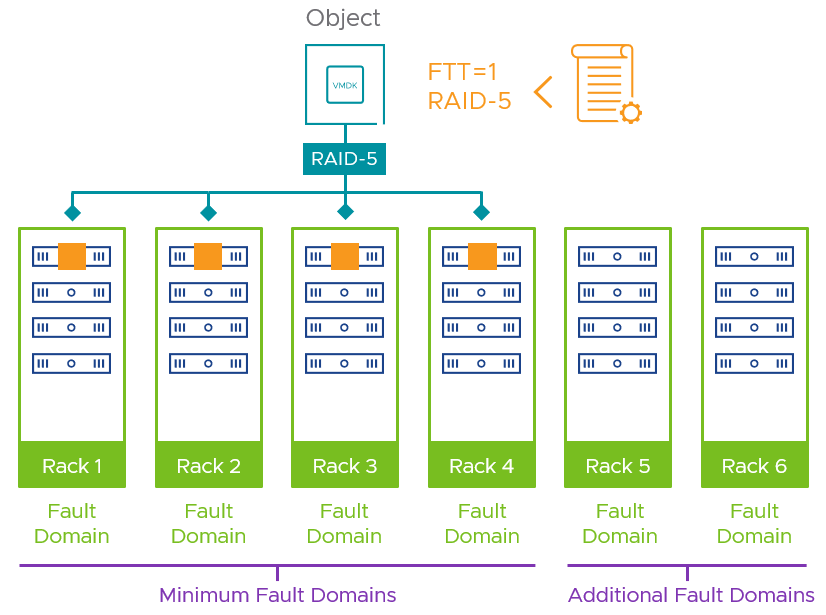

The fault domains feature in vSAN is an optional configuration that improves the resilience of a cluster based on your topology. It ensures that data in the cluster remains available if a single host, or a group of hosts such as a rack or a data closet, becomes unavailable. When you logically group hosts in a vSAN cluster as shown in Figure 1, vSAN adjusts where it places data so that it maintains the level of resilience prescribed by the storage policy assigned to a given object, such as a VMDK.

Figure 1. Fault domains protect against different types of failures

The objective of fault domains is simple, and so is their configuration. Yet some design and operational considerations are occasionally overlooked:

- How many fault domains are recommended in a vSAN cluster?

- How many hosts are recommended within a fault domain?

- How symmetrical do these fault domains need to be?

- How are slack space and free space impacted when using fault domains?

- How do fault domains compare to the use of multiple clusters?

While the vSAN Design and Sizing Guide and the vSAN Operations Guidance document provide recommendations on the design and operation of fault domains, let’s address these questions below.

How many fault domains are recommended in a vSAN cluster?

The answer comes from another question: which level of failures to tolerate (FTT) that you plan to use in this cluster requires the most hosts to comply? If you plan to use nothing more than FTT=1 via RAID-1 mirroring, you need at least 3 fault domains. If some objects require FTT=2 using RAID-6, you need a minimum of 6 fault domains.

This defines the absolute minimum number of fault domains required to run a given storage policy, but not the recommended number. Just as with host counts in vSAN clusters without fault domains, we recommend an N+1 strategy at a minimum, meaning one more fault domain than the minimum required, as shown in Figure 2. This allows vSAN to heal itself automatically after the total failure of a fault domain, because it has an available fault domain to rebuild into, regaining the level of resilience assigned by the storage policy.

Figure 2. Using an N+1 strategy or greater when using fault domains

If your organization's requirements are what motivate interest in this feature, then factoring in an N+1 or greater design is a prudent step.
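The sizing logic above can be sketched in a few lines of Python: take the most demanding policy in use to get the minimum, then add a spare for N+1. Only the RAID-1 FTT=1 (3) and RAID-6 FTT=2 (6) minimums come from this article; the other table entries are assumptions based on commonly documented vSAN Original Storage Architecture requirements (RAID-1 needs 2n+1 fault domains, RAID-5 needs 4), and the function names are hypothetical.

```python
# Minimum fault domains per (data placement scheme, FTT) pair.
# RAID-1/FTT=1 -> 3 and RAID-6/FTT=2 -> 6 are from the article; the other
# entries are assumptions based on vSAN OSA guidance (RAID-1 needs 2n+1).
MIN_FAULT_DOMAINS = {
    ("RAID-1", 1): 3,
    ("RAID-1", 2): 5,
    ("RAID-1", 3): 7,
    ("RAID-5", 1): 4,
    ("RAID-6", 2): 6,
}


def required_fault_domains(policies):
    """Absolute minimum, driven by the most demanding policy in the cluster."""
    return max(MIN_FAULT_DOMAINS[p] for p in policies)


def recommended_fault_domains(policies, spare=1):
    """N+1 (or N+spare) so vSAN has somewhere to rebuild a failed fault domain."""
    return required_fault_domains(policies) + spare


policies = [("RAID-1", 1), ("RAID-6", 2)]
print(required_fault_domains(policies))     # 6
print(recommended_fault_domains(policies))  # 7
```

Raising `spare` above 1 models an "N+1 or greater" design.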

How many hosts are recommended within a fault domain?

The number of hosts in each fault domain determines the free resources available for rebuilding data when a single host within a fault domain fails (as opposed to an entire fault domain) and no other fault domain is available as a rebuild target. One could use as few as one host per fault domain, but that would defeat the purpose of the feature. Two hosts could be used, but the surviving host may not have enough capacity to absorb the data of a failed host in that fault domain when no other fault domain is available. Three hosts per fault domain is a realistic starting point, because this configuration is less likely to run into capacity issues after a single host outage when no other fault domain is available to regain storage policy compliance for an object. The number of hosts within a fault domain becomes less important as the number of fault domains increases beyond the minimum required.
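A simplified capacity model shows why two hosts per fault domain is tight and three is more forgiving. The sketch below assumes identical hosts filled evenly and ignores vSAN's finer-grained placement constraints (disk groups, individual disks, slack space), so treat its thresholds as optimistic upper bounds; the function names are hypothetical.

```python
def can_absorb_host_failure(hosts_per_fd, host_capacity_tb, used_fraction):
    """Can the surviving hosts in one fault domain take over a failed host's data?

    Simplified model: identical hosts, each filled to used_fraction, and no
    other fault domain is available as a rebuild target.
    """
    if hosts_per_fd < 2:
        return False  # no surviving host in the fault domain to rebuild onto
    data_to_rebuild = host_capacity_tb * used_fraction
    free_on_survivors = (hosts_per_fd - 1) * host_capacity_tb * (1 - used_fraction)
    return free_on_survivors >= data_to_rebuild


def max_safe_utilization(hosts_per_fd):
    """Highest even fill level at which one host failure can still be absorbed.

    Solving (k - 1) * (1 - u) >= u for u gives u <= (k - 1) / k.
    """
    return (hosts_per_fd - 1) / hosts_per_fd


for k in range(1, 5):
    print(f"{k} host(s) per fault domain: at most {max_safe_utilization(k):.0%} full")
```

Under this model a two-host fault domain must stay at or below 50% full to absorb a host failure, three hosts raise the ceiling to about 67%, and four to 75%, before accounting for the free-space headroom vSAN also needs.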

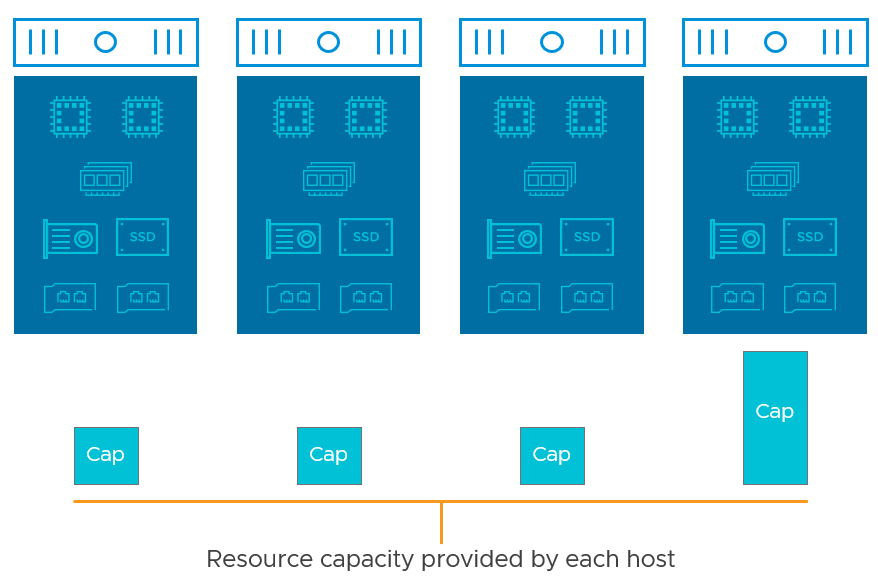

How symmetrical do fault domains need to be?

vSAN does not require strict symmetry of hosts across a cluster, but an even level of resources across hosts is good practice for any clustered resource, whether CPU, memory, or storage in vSAN. Symmetric hosts drastically reduce the complexity of ensuring that sufficient resources are available after a failure. This is especially true for clusters with very few hosts. If a host that provides a disproportionately large share of resources fails, as illustrated in Figure 3, it can be difficult to find sufficient resources elsewhere to absorb its load.

Figure 3. An example of asymmetrical hosts in a vSAN cluster
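The effect of asymmetry can be illustrated with a rough check of the worst single-host failure: find the host holding the most data and compare it with the free capacity on all other hosts. This is a minimal sketch with hypothetical host names and figures; it ignores the rule that a rebuilt component cannot land on a host already holding another copy of the same object, which in practice makes placement harder, not easier.

```python
def worst_case_host_failure(hosts):
    """hosts maps host name -> (capacity_tb, used_tb).

    Returns the host whose loss requires the most data to be rebuilt, that
    amount, the free capacity on every other host, and whether it fits.
    """
    victim = max(hosts, key=lambda name: hosts[name][1])
    to_rebuild = hosts[victim][1]
    free_elsewhere = sum(
        cap - used for name, (cap, used) in hosts.items() if name != victim
    )
    return victim, to_rebuild, free_elsewhere, free_elsewhere >= to_rebuild


# Both hypothetical clusters have 80 TB raw capacity and 48 TB (60%) used.
symmetric = {f"host-{i}": (20, 12) for i in range(1, 5)}
asymmetric = {"host-1": (50, 30), "host-2": (10, 6), "host-3": (10, 6), "host-4": (10, 6)}

print(worst_case_host_failure(symmetric))   # ('host-1', 12, 24, True)
print(worst_case_host_failure(asymmetric))  # ('host-1', 30, 12, False)
```

At identical overall utilization, the symmetric cluster can re-protect a failed host's data while the asymmetric one cannot.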

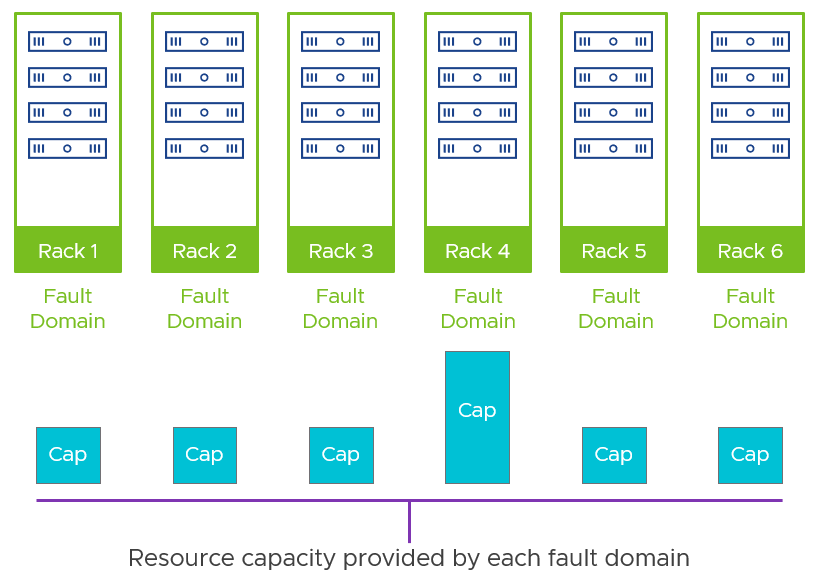

Symmetry across fault domains is highly recommended for the same reason that host symmetry is, especially in vSAN clusters with very few hosts. Symmetry in this case applies to host specifications and to the host count per fault domain. Fault domains that are asymmetrical in resources behave much like a very small asymmetrical cluster: when a failure occurs, vSAN may have more difficulty placing data.

Figure 4. An example of asymmetrical fault domains
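Because symmetry here covers both host specifications and host count per fault domain, a quick audit can flag the two most common kinds of drift. The sketch below is hypothetical: the input layout (fault domain name mapped to a list of per-host capacities in TB) and the 1.2 capacity-ratio tolerance are illustrative choices, not vSAN settings.

```python
def audit_fault_domain_symmetry(fault_domains, max_capacity_ratio=1.2):
    """fault_domains maps fault domain name -> list of host capacities (TB).

    max_capacity_ratio is a hypothetical tolerance between the largest and
    smallest fault domain, not a vSAN parameter. Returns a list of findings.
    """
    host_counts = {fd: len(hosts) for fd, hosts in fault_domains.items()}
    capacities = {fd: sum(hosts) for fd, hosts in fault_domains.items()}
    findings = []
    if len(set(host_counts.values())) > 1:
        findings.append(f"unequal host counts: {host_counts}")
    ratio = max(capacities.values()) / min(capacities.values())
    if ratio > max_capacity_ratio:
        findings.append(f"capacity ratio {ratio:.2f} exceeds {max_capacity_ratio}")
    return findings


balanced = {f"rack-{r}": [20, 20, 20] for r in "abcd"}
lopsided = {"rack-a": [20, 20, 20], "rack-b": [20, 20, 20],
            "rack-c": [40, 40], "rack-d": [20, 20, 20]}

print(audit_fault_domain_symmetry(balanced))  # []
for finding in audit_fault_domain_symmetry(lopsided):
    print(finding)
```

The lopsided layout trips both checks: one fault domain has fewer hosts, and it also holds a third more capacity than the others.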

How are slack space and free space impacted when using fault domains?

“Free space” is often thought of as a percentage of available capacity across the cluster. vSAN views free space at a much more discrete level: hosts, disk groups, and even individual disks. Fault domains introduce another placement and “free space” constraint for vSAN. Under any failure condition, with or without fault domains, vSAN looks for a location to repair the data that does not overlap with the other copies of that data. Maintaining 25-30% of capacity as free space for these transient activities is still advised for clusters using fault domains.
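The difference between a cluster-wide percentage and vSAN's more discrete view can be shown with a small check that evaluates free space per fault domain as well as in aggregate. This is a hypothetical sketch: the 25% default reflects the low end of the 25-30% guidance above, the figures are made up, and real vSAN placement also works at host, disk group, and disk granularity, which this does not model.

```python
def free_fraction(capacity_tb, used_tb):
    return (capacity_tb - used_tb) / capacity_tb


def check_free_space(fault_domains, reserve=0.25):
    """fault_domains maps fault domain name -> (capacity_tb, used_tb).

    Returns (cluster_ok, short_fds): whether the cluster as a whole keeps the
    reserve free, and which fault domains individually fall below it.
    """
    total_capacity = sum(cap for cap, _ in fault_domains.values())
    total_used = sum(used for _, used in fault_domains.values())
    cluster_ok = free_fraction(total_capacity, total_used) >= reserve
    short_fds = [
        name
        for name, (cap, used) in fault_domains.items()
        if free_fraction(cap, used) < reserve
    ]
    return cluster_ok, short_fds


racks = {"fd-1": (100, 85), "fd-2": (100, 60), "fd-3": (100, 65), "fd-4": (100, 70)}
print(check_free_space(racks))  # (True, ['fd-1'])
```

The cluster as a whole sits at 30% free and looks healthy, yet fd-1 has only 15% free, which constrains any repair that has to land in that fault domain.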

If deduplication and compression are used, the same considerations apply to clusters with fault domains as to clusters without them. Deduplication and compression is an opportunistic space-efficiency feature: data movement caused by activities such as storage policy changes and host failures means that the effective capacity savings are never guaranteed.

How do fault domains compare to the use of multiple clusters?

The prerequisites for fault domains mean that they are usually enabled on clusters with a moderate to large number of hosts. If an organization is looking at fault domains and has come up with a design (number of fault domains x number of hosts in each), a fair follow-up question is: can the desired outcome be achieved with multiple vSAN clusters that do not use fault domains, and is that a better approach given the requirements?

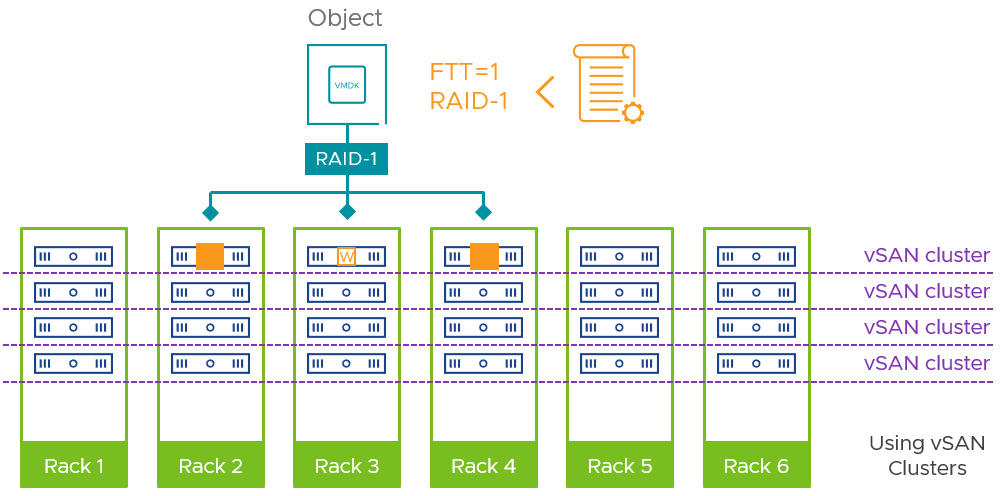

In one scenario, shown in Figure 5, rack-level protection could be achieved by using multiple clusters instead of the fault domains feature. A cluster would consist of just one host per rack, thereby creating a shallow (one host deep) implicit fault domain.

Figure 5. Using smaller vSAN clusters spanning across racks, instead of the fault domains feature
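The layout in Figure 5 amounts to slicing each rack horizontally: the first host of every rack forms one cluster, the second host of every rack forms the next, and so on. A minimal sketch, assuming every rack holds the same number of hosts; the rack and host names are hypothetical.

```python
def clusters_one_host_per_rack(racks):
    """racks maps rack name -> ordered list of host names.

    Returns clusters that each contain exactly one host from every rack, so
    losing a rack removes one host from each cluster rather than a whole cluster.
    """
    if len({len(hosts) for hosts in racks.values()}) != 1:
        raise ValueError("every rack must contain the same number of hosts")
    return [list(cluster) for cluster in zip(*racks.values())]


racks = {f"rack-{r}": [f"r{r}-host{h}" for h in range(1, 4)] for r in range(1, 5)}
for i, cluster in enumerate(clusters_one_host_per_rack(racks), start=1):
    print(f"cluster-{i}: {cluster}")
```

Four racks of three hosts yield three four-host clusters; a rack failure costs each cluster a single host, which each cluster handles like an ordinary host failure.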

This does not provide the resilience capabilities of the vSAN fault domains feature, because a cluster would always be limited to one host per rack. Multiple clusters do provide another unique benefit, however: a smaller operational and maintenance domain. Cluster services (deduplication and compression, encryption, etc.) can be tailored to a smaller set of hosts, and maintenance and unplanned events are easier to manage. For more information, see the document, vSAN Cluster Design – Large Clusters Versus Small Clusters.

Summary

Fault domains are an availability feature of vSAN that protect not only against host failures but also against the failure of an entire collection of hosts, be it a rack, a closet, or whatever failure boundary you define. Here are some key takeaways about the design and operation of the fault domains feature in vSAN.

- Evaluate if protection through the use of fault domains is required for your environment.

- Always implement at least one more fault domain than the minimum required. This is similar to the guidance we have about hosts in a vSAN cluster not using explicit fault domains.

- Ensure each fault domain has enough hosts to tolerate a host failure and to absorb all of that host's data on the remaining hosts in the same fault domain.

- A cluster with more fault domains and fewer hosts in each fault domain tends to be more flexible than one with fewer fault domains and more hosts in each.

- Ensure as much symmetry as possible for CPU, memory, and storage capacity to simplify failure scenarios.

Discover more from VMware Cloud Foundation (VCF) Blog
