The introduction of VMware Cloud on Amazon Web Services (AWS) in 2017 marked a special moment for VMware customers. It meant that customers could run their workloads in AWS on the same suite of software-defined data center products they use in private cloud environments on premises. With a hardware and software stack operated by VMware, it gave customers the ability to extend their on-premises environment into the cloud, or to run their workloads exclusively on hardware and software owned and managed by someone else.

VMware Cloud on AWS continues to improve all aspects of the platform, including performance and resilience. While these improvements arrive incrementally with each release, they add up to impressive gains when viewed over the course of several releases. Let’s look back and see how much these incremental improvements have given customers in terms of the performance and resilience of their workloads running in VMware Cloud on AWS.

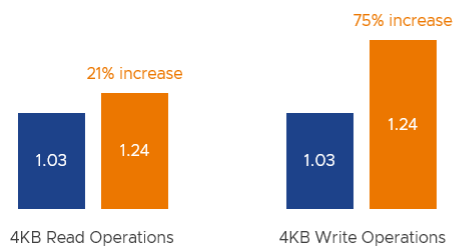

Comparing the 1.03 Release to the 1.24 Release

Over the past six years, we have tracked the iterative improvements delivered with each release. Complex validation testing ensures that new features and capabilities always result in a better platform for our customers. In the world of solution platforms, “better” can mean different things. While ease of use and other characteristics are certainly important, improvements in characteristics such as performance and operational efficiency are much easier to measure. The data below compares the 1.03 release, which debuted on March 7, 2018, with the 1.24 release, available as of November 14, 2023, using the same i3.metal instance type.

I/O Performance

Small I/Os. Read and write operations that consist of small I/Os are sometimes considered the easier I/O type for a storage system to process. They do present their own challenges by saturating internal queues and buffers, and they remain an important I/O type for serialized applications. On the 1.24 release, 4KB read operations show a 21% increase over the 1.03 release, and 4KB write operations show a 76% increase over the 1.03 release.

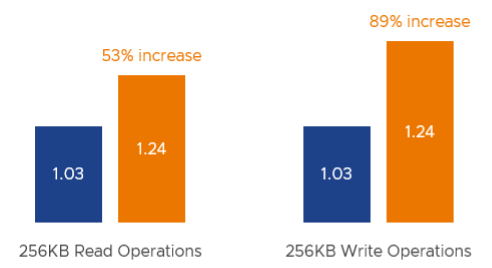

Large I/Os. Read and write operations that consist of large I/O sizes are typically the most challenging for storage systems. They are requesting or committing a massive amount of data for a proportionally small number of read and write commands. This may put a burden on the physical bandwidth of the network and the server hardware. When we compare large I/O operations consisting of 256KB reads and writes, we see significant improvements. On the 1.24 release, 256KB read operations show a 53% increase over the 1.03 release, and 256KB write operations show an 89% increase over the 1.03 release.

The improvement in I/O performance has an obvious benefit: because the hosts can process more I/O commands (IOPS) and more data payload (throughput), workloads can collectively achieve higher levels of performance if the applications demand it. But an often overlooked and perhaps even more important benefit is the improved consistency in performance. Latency will be more consistent because the storage system can satisfy read and write operations more quickly, reducing the period in which the applications may be contending for resources.
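To make the relationship between IOPS, I/O size, and throughput concrete, here is a minimal Python sketch. It relies only on the general identity that throughput equals IOPS multiplied by I/O size. The baseline IOPS value and the function names are hypothetical, chosen purely for illustration; they are not measurements from either release.

```python
KIB = 1024
MIB = 1024 * 1024


def throughput_mib_per_s(iops: float, io_size_kib: int) -> float:
    """Return throughput in MiB/s for a given IOPS rate and I/O size in KiB."""
    return iops * io_size_kib * KIB / MIB


def apply_percent_increase(baseline: float, percent_increase: float) -> float:
    """Scale a baseline value by a percentage increase (76 means +76%)."""
    return baseline * (1 + percent_increase / 100)


# Hypothetical baseline, NOT a measured value from the 1.03 or 1.24 release.
example_iops = 10_000

small = throughput_mib_per_s(example_iops, 4)    # 4KB I/Os
large = throughput_mib_per_s(example_iops, 256)  # 256KB I/Os

# At the same IOPS, 256KB I/Os move 64 times the data of 4KB I/Os.
print(f"4KB:   {small:.2f} MiB/s")
print(f"256KB: {large:.2f} MiB/s")
print(f"ratio: {large / small:.0f}x")

# A 76% increase means the new value is 1.76 times the baseline.
print(f"relative value after +76%: {apply_percent_increase(1.0, 76):.2f}")
```

This is why large I/Os tend to stress bandwidth while small I/Os tend to stress queues and buffers: the same number of commands can represent very different amounts of data.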

Operational Efficiency

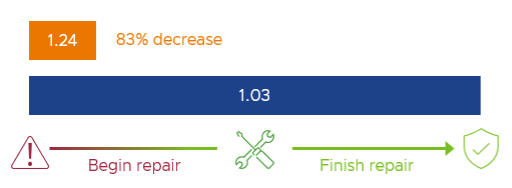

The benefits of these enhancements are not limited to better performance for your workloads. They also translate to real-world improvements in operational efficiency. For example, our telemetry data shows that when comparing the 1.03 release with the 1.24 release, the time it takes the system to repair itself after a hardware failure has been reduced by over 80%. These repair processes are fully automated and transparent to both the consumers of the applications and those who administer the workloads.

A reduced time to regain prescribed levels of resilience means a more robust platform. But recovering from hardware failures faster has another benefit: shared resources become available to your workloads sooner. This increases the amount of time in which peak performance can be achieved and improves the consistency of performance provided by the system.
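A percentage reduction in repair time is easy to misread, so here is a small Python sketch of the arithmetic. It converts a reduction in elapsed time into an equivalent speedup factor. The function name is hypothetical, and the only figure taken from the text is the 80% threshold.

```python
def speedup_from_time_reduction(percent_reduction: float) -> float:
    """Convert a reduction in elapsed time into a speedup factor.

    If a task takes (100 - p)% of its original time, it completes
    1 / (1 - p/100) times as fast.
    """
    if not 0 <= percent_reduction < 100:
        raise ValueError("percent_reduction must be in the range [0, 100)")
    return 1 / (1 - percent_reduction / 100)


# An 80% reduction means the repair takes one fifth of the original time.
factor = speedup_from_time_reduction(80)
print(f"80% less time is roughly a {factor:.1f}x speedup")
```

Since the stated reduction is "over 80%", this factor is a lower bound on the improvement rather than an exact figure.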

What’s Next

In November of 2023, we announced support for the vSAN Express Storage Architecture (ESA) in VMware Cloud on AWS. Paired with the new i4i.metal instance type, this will usher in all-new levels of performance and efficiency for customers. Workloads can run faster and consume fewer resources, which translates to lower costs.

Summary

The improvements in performance and operational efficiency for VMware Cloud on AWS demonstrate precisely why a software-defined infrastructure is a superior way to provide the platform for your workloads, whether it is on premises, in the cloud, or a combination of both. Be sure to visit vmc.techzone.vmware.com to learn more about VMware Cloud on AWS and stay informed on the latest updates.

The post Continuous Performance Improvements in VMware Cloud On AWS appeared first on VMware Cloud Blog.