Many of our customers use IPv6 networks in their datacenters for a variety of reasons. We expect that many more will transition from IPv4 to IPv6 to reap the large address range and other benefits that IPv6 provides. Keeping this in mind, we have worked on a number of performance enhancements for the way that vSphere 5.5 manages IPv6 network traffic. Some new features that we have implemented include:

• TCP Checksum Offload: For Network Interface Cards (NICs) that support this feature, the computation of the TCP checksum of the IPv6 packet is offloaded to the NIC.

• Software Large Receive Offload (LRO): LRO is a technique of aggregating multiple incoming packets from a single stream into a larger buffer before they are passed higher up the networking stack, thus reducing the number of packets that have to be processed and saving CPU. Many NICs do not support LRO for IPv6 packets in hardware. For such NICs, we implement LRO in the vSphere network stack.

• Zero-Copy Receive: This feature prevents an unnecessary copy from the packet frame to a memory space in the vSphere network stack. Instead, the frame is processed directly.
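To make the LRO idea above concrete, here is a minimal sketch of how in-order segments from one stream can be merged before they travel up the stack. It is an illustration only, not the vSphere implementation: the `Segment` type, the `lro_coalesce` function, the 65535-byte aggregate limit, and the 1440-byte payload size (a 1500-byte MTU minus 40 bytes of IPv6 header and 20 bytes of TCP header) are all assumptions made for this example. Sequence-number wraparound and TCP flags are ignored.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

# (source address, source port, destination address, destination port)
FlowKey = Tuple[str, int, str, int]


@dataclass
class Segment:
    flow: FlowKey
    seq: int        # sequence number of the first payload byte
    payload: bytes


def lro_coalesce(segments: Iterable[Segment], max_bytes: int = 65535) -> List[Segment]:
    """Merge contiguous, in-order segments of the same flow into larger ones."""
    merged: List[Segment] = []
    open_idx: Dict[FlowKey, int] = {}  # flow -> index of its open aggregate in `merged`
    for seg in segments:
        idx = open_idx.get(seg.flow)
        if idx is not None:
            agg = merged[idx]
            contiguous = agg.seq + len(agg.payload) == seg.seq
            fits = len(agg.payload) + len(seg.payload) <= max_bytes
            if contiguous and fits:
                # Extend the open aggregate instead of emitting a new packet.
                merged[idx] = Segment(agg.flow, agg.seq, agg.payload + seg.payload)
                continue
        # Gap, size limit reached, or first segment of the flow: start a new aggregate.
        open_idx[seg.flow] = len(merged)
        merged.append(seg)
    return merged


flow = ("2001:db8::1", 40000, "2001:db8::2", 5001)  # hypothetical addresses and ports
mss = 1440
packets = [Segment(flow, i * mss, bytes(mss)) for i in range(10)]
aggregates = lro_coalesce(packets)
print(len(packets), "packets in,", len(aggregates), "packet(s) passed up the stack")
```

In this toy run, ten wire-sized segments reach the upper layers as a single 14400-byte buffer, which is where the CPU saving comes from: per-packet processing cost is paid once rather than ten times.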

vSphere 5.1 offers the same features, but only for IPv4. As a result, in vSphere 5.1, services such as vMotion, NFS, and Fault Tolerance had lower bandwidth in IPv6 networks than in IPv4 networks. vSphere 5.5 solves that problem: it delivers similar performance over both IPv4 and IPv6 networks, which makes a seamless transition from IPv4 to IPv6 possible.

Next, we demonstrate the performance of vMotion over a 40Gb/s network connecting two vSphere hosts. We also demonstrate the performance of networking traffic between two virtual machines created on the vSphere hosts.

System Configuration

We set up a test environment with the following specifications:

• Servers: 2 Dell PowerEdge R720 servers running vSphere 5.5.

• CPU: 2-socket, 12-core Intel Xeon E5-2667 @ 2.90 GHz.

• Memory: 64GB, spread across two NUMA nodes (32GB per node).

• Networking: 1 dual-port Intel 10GbE adapter and 1 dual-port Broadcom 10GbE adapter, placed on separate PCI Gen-2 x8 lanes in both machines. We thus had 40Gb/s of network connectivity between the two vSphere hosts.

• Virtual Machine for vMotion: 1 VM running Red Hat Enterprise Linux Server 6.3 assigned 2 virtual CPUs (vCPUs) and 48GB memory. We migrate this VM between the two vSphere hosts.

• Virtual Machines for networking tests: A pair of VMs running Red Hat Enterprise Linux Server 6.3, one on each host, each assigned 4 vCPUs and 16GB memory. We use these VMs to test the performance of networking traffic between two VMs.

We configured each vSphere host with four vSwitches, each vSwitch having one 10GbE uplink port. We created one VMkernel adapter on each vSwitch. Each VMkernel adapter was configured on the same subnet. The MTU of the NICs was set to the default of 1500 bytes. We enabled each VMkernel adapter for vMotion, which allowed vMotion traffic to use the 40Gb/s network connectivity. We created four VMXNET3 virtual adapters on the pair of virtual machines used for networking tests.

Methodology

In order to demonstrate the performance for vMotion, we simulated a heavy memory usage footprint in the virtual machine. The memory-intensive program allocated 48GB memory in the virtual machine and touched one byte in each page in an infinite loop. We migrated this virtual machine between the two vSphere hosts over the 40Gb/s network. We used net-stats to monitor network throughput and CPU utilization on the sending and receiving systems. We also noted the bandwidth achieved in each pre-copy iteration of vMotion from VMkernel logs.
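The memory workload described above can be approximated with a short sketch like the following. This is not the program we ran (the function names, the use of an anonymous `mmap` region, and the bounded `passes` parameter are assumptions for illustration); it only shows the access pattern of writing one byte in every page so that each page is dirtied again on every pass.

```python
import mmap


def touch_pages(buf, page_size, passes):
    """Write one byte in every page of `buf`, `passes` times; return the write count."""
    writes = 0
    for n in range(passes):
        value = n % 256
        for offset in range(0, len(buf), page_size):
            buf[offset] = value  # a single-byte store is enough to dirty the page
            writes += 1
    return writes


def dirty_memory(size_bytes, passes=1):
    """Allocate an anonymous mapping of `size_bytes` and touch every page in it."""
    buf = mmap.mmap(-1, size_bytes)  # -1: anonymous memory, not backed by a file
    try:
        return touch_pages(buf, mmap.PAGESIZE, passes)
    finally:
        buf.close()


# Small demonstration: 64 pages, 3 passes.
print(dirty_memory(64 * mmap.PAGESIZE, passes=3), "page writes")
```

To mimic the test in this article, `size_bytes` would be 48GB and the outer loop would run without a bound. A compiled language would dirty pages much faster than this Python loop, so treat the sketch as a description of the pattern rather than a load generator.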

In order to demonstrate the performance of virtual machine networking traffic, we used Netperf 2.6.0 to simulate traffic from one virtual machine to the other. We created two connections for each virtual adapter. Each connection generated traffic for the TCP_STREAM workload, with a 16KB message size and a 256KB socket buffer size. As in the previous experiment, we used net-stats to monitor network throughput and CPU utilization.
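As a rough guide to reproducing the Netperf parameters above, the sketch below builds one command line per connection: two connections for each of the four virtual adapters, a 16KB send size, and 256KB socket buffers on both ends. The receiver addresses, the 60-second duration, and the helper names are assumptions for illustration and are not taken from our test scripts. The options shown (`-H`, `-t`, `-l`, `-4`/`-6`, and the test-specific `-m`, `-s`, `-S`) should be checked against the manual of the Netperf version in use. The code only prints the commands; it does not run them.

```python
import shlex


def netperf_command(host, ipv6, duration_s=60,
                    msg_bytes=16 * 1024, sockbuf_bytes=256 * 1024):
    """Return the argv list for one TCP_STREAM connection to `host`."""
    family = "-6" if ipv6 else "-4"
    return [
        "netperf", family,
        "-H", host,                 # remote system running netserver
        "-t", "TCP_STREAM",
        "-l", str(duration_s),      # test length in seconds (assumed value)
        "--",                       # test-specific options follow
        "-m", str(msg_bytes),       # send message size
        "-s", str(sockbuf_bytes),   # local socket buffer size
        "-S", str(sockbuf_bytes),   # remote socket buffer size
    ]


def build_test_plan(adapter_addresses, ipv6, connections_per_adapter=2):
    """One command per connection, `connections_per_adapter` for each address."""
    return [netperf_command(addr, ipv6)
            for addr in adapter_addresses
            for _ in range(connections_per_adapter)]


# Hypothetical addresses for the four VMXNET3 adapters of the receiving VM.
receiver_addrs = [f"2001:db8::{n}" for n in range(11, 15)]
plan = build_test_plan(receiver_addrs, ipv6=True)
for argv in plan:
    print(shlex.join(argv))
```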

Results

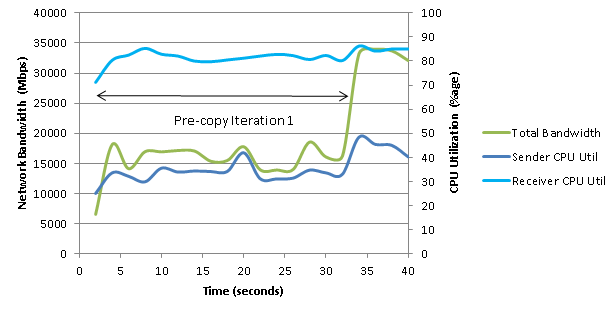

Figures 1 and 2 show, for IPv4 and IPv6 traffic, the network throughput and CPU utilization data that we collected over the 40-second duration of the migration. After the guest memory is staged for migration, vMotion begins iterations of pre-copying the memory contents from the source vSphere host to the destination vSphere host.

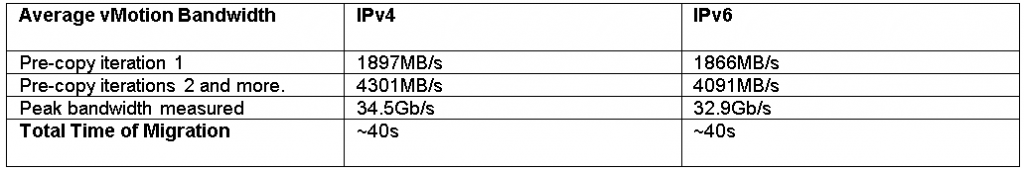

In the first iteration, the destination vSphere host needs to allocate pages for the virtual machine. Network throughput is below the available bandwidth in this stage as vMotion bandwidth usage is throttled by the memory allocation on the destination host. The average network bandwidth during this phase was 1897 megabytes per second (MB/s) for IPv4 and 1866MB/s for IPv6.

After the first iteration, the source vSphere host sends the delta of changed pages. During this phase, the average network bandwidth was 4301MB/s with IPv4 and 4091MB/s with IPv6.

The peak bandwidth measured with net-stats was 34.5Gb/s for IPv4 and 32.9Gb/s for IPv6. The CPU utilization of both systems followed a similar trend for both IPv4 and IPv6. Note also that vMotion is very CPU intensive on the receiving vSphere host, and a high CPU clock speed is necessary to achieve high bandwidths. The results are summarized in Table 1. In all, migration of the virtual machine was complete in 40 seconds regardless of IPv4 or IPv6 connectivity.
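The figures quoted above mix MB/s and Gb/s, so a short calculation helps when comparing them. The sketch below converts the average bandwidths to Gb/s and computes how far IPv6 trails IPv4 in each phase. It assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits), which the measurements do not state explicitly; the helper names are illustrative.

```python
def mbps_to_gbps(mb_per_s):
    """Convert megabytes per second to gigabits per second (decimal units assumed)."""
    return mb_per_s * 8 / 1000


def percent_lower(ipv4, ipv6):
    """How much lower the IPv6 value is, as a percentage of the IPv4 value."""
    return (ipv4 - ipv6) / ipv4 * 100


# (IPv4, IPv6) pairs as reported in the text.
reported = {
    "first pre-copy iteration (MB/s)": (1897, 1866),
    "later pre-copy iterations (MB/s)": (4301, 4091),
    "peak (Gb/s)": (34.5, 32.9),
}

for label, (v4, v6) in reported.items():
    print(f"{label}: IPv6 is {percent_lower(v4, v6):.1f}% lower")

print(f"4301 MB/s = {mbps_to_gbps(4301):.1f} Gb/s, 4091 MB/s = {mbps_to_gbps(4091):.1f} Gb/s")
```

Under that unit assumption, the 4301MB/s average corresponds to about 34.4Gb/s, which is consistent with the 34.5Gb/s peak, and the gap between the two protocols stays under 5% in every phase.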

Figure 1. vMotion over an IPv4 network

Figure 2. vMotion over an IPv6 network

Table 1. vMotion results—IPv4 versus IPv6

The results for virtual machine networking traffic are in Table 2. While the throughput with IPv6 is about 2.5% lower, the CPU utilization is the same on both the sending and receiving sides.

Table 2. Virtual machine networking results—IPv4 versus IPv6

Thanks to a number of IPv6 enhancements added to vSphere 5.5, migrations with vMotion over IPv6 networks run at speeds within 5% of those over IPv4 networks. For virtual machine networking performance, the throughput of IPv6 is within 2.5% of IPv4. In addition, testing shows that we can drive bandwidth close to the 40Gb/s link speed with both protocols. Combined, these enhancements allow for a seamless transition from IPv4 to IPv6 with little performance impact.